Insights, Products

GPT-3: What is it, and what’s the hype about?


The GPT-3 model has created a lot of buzz in the technology world. Experts have called it “amazing” and “impressive”, and some have even described it as “the most powerful language model ever built.” So, what’s GPT-3, and what’s all the hype about?

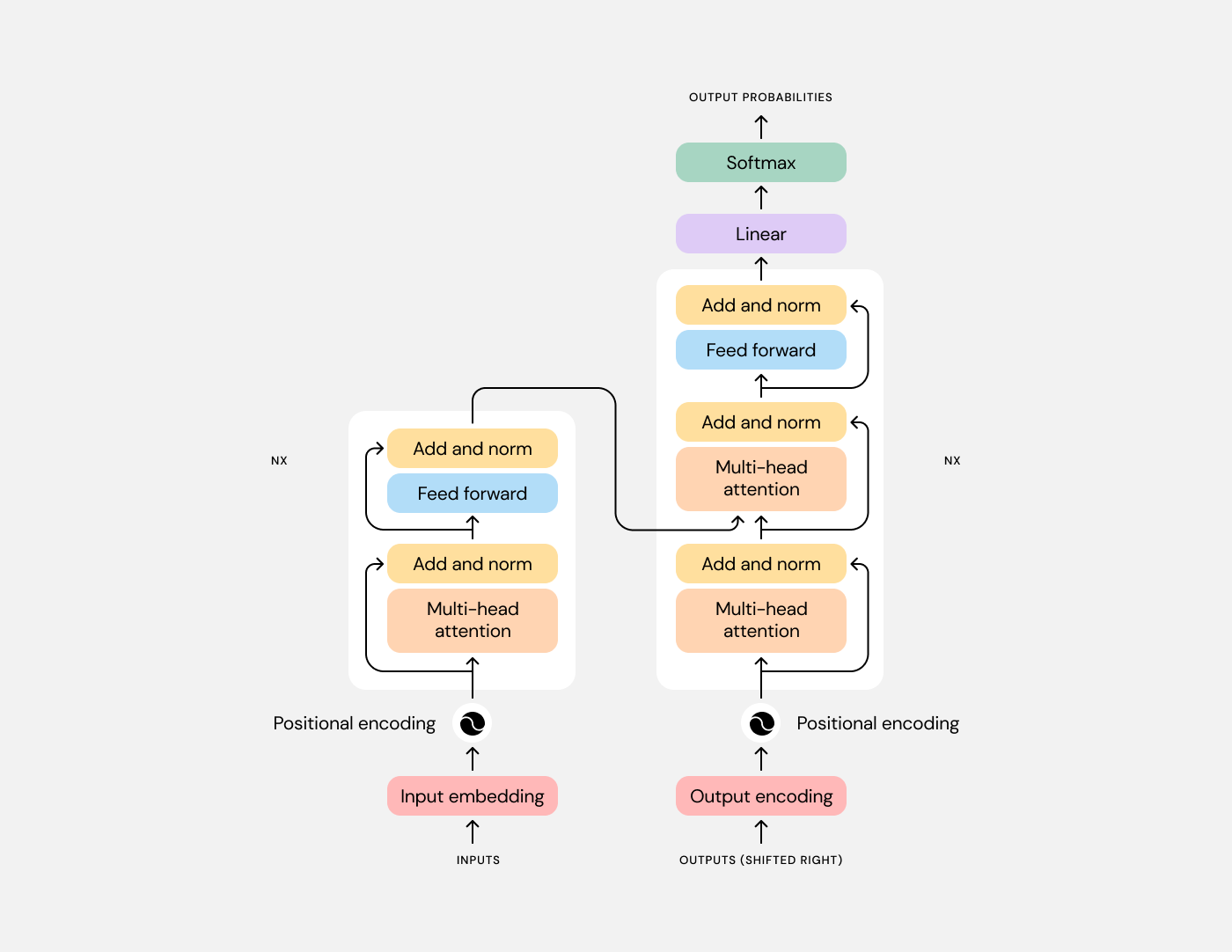

GPT-3 is short for Generative Pre-trained Transformer 3, the third generation of the model. It’s a machine learning language model that was trained on huge amounts of text, much of it collected from the internet, and uses what it learned to generate new text. It was developed by OpenAI, an AI research company based in San Francisco. Released in 2020, GPT-3 has 175 billion parameters. In comparison, its predecessor, GPT-2, had only 1.5 billion parameters, so GPT-3 is more than 100 times larger.

Once primed by a human with a short text prompt, it can produce almost any type of text. With minimal human input, it has generated creative fiction, working code, business memos, recipes, ads, and even academic research papers. At the time of its release, no other language model had come close to this.

This makes GPT-3 one of the most powerful AI-based language technologies in the world.

Simply put, GPT-3 is a very sophisticated text predictor. Given a text input, it produces its best guess as to what should come next. It then appends that output to the original input and uses the combined text as the new basis for generating the next piece, and so on.
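To make the predict, append, repeat loop concrete, here is a minimal sketch in Python. It is a toy, not GPT-3: it assumes a tiny bigram table (counts of which word follows which) in place of a neural network, looks only at the last word instead of the whole context, and always picks the most frequent next word. The names `train_bigrams` and `generate` are hypothetical helpers for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count which word follows which in a tiny training text."""
    words = corpus.split()
    table: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def generate(prompt: str, table: dict[str, Counter], max_new_words: int = 5) -> str:
    """Greedy autoregressive generation: predict one word, append it, repeat."""
    context = prompt.split()
    for _ in range(max_new_words):
        candidates = table.get(context[-1])
        if not candidates:
            break  # the toy model has never seen this word, so it stops
        # Most frequent follower; ties go to the one seen first in the corpus
        next_word, _count = candidates.most_common(1)[0]
        context.append(next_word)  # the output becomes part of the next input
    return " ".join(context)


corpus = "the cat sat on the mat . the cat ate the fish ."
table = train_bigrams(corpus)
print(generate("the", table))
```

Running it prints `the cat sat on the cat`: each predicted word is fed back in as input for the next step. The repetition also hints at why real models condition on much more context than a single word.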

GPT-3 was trained on a huge collection of text, much of it gathered from the internet. It doesn’t look anything up while it writes: based on the patterns it learned during training, it produces the content it considers statistically most plausible to follow the original input.
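The step from "statistically most plausible" to an actual word can be sketched as follows. The scores below are made up for illustration; a real model computes one score (a logit) for every token in its vocabulary. A softmax turns the scores into probabilities, and the model then either takes the most likely token (greedy decoding) or samples from the distribution, optionally sharpened or flattened by a temperature parameter. All function names here are hypothetical.

```python
import math
import random


def softmax(scores: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores (logits) into probabilities that sum to 1."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: s / temperature for tok, s in scores.items()}
    top = max(scaled.values())  # subtracting the max avoids overflow in exp()
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def most_plausible(probs: dict[str, float]) -> str:
    """Greedy decoding: pick the single most likely candidate."""
    return max(probs, key=probs.get)


def sample(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling: pick a candidate at random, weighted by its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores for candidate next words after "The sky is"
scores = {"blue": 4.0, "clear": 2.5, "falling": 0.5}
probs = softmax(scores)
print({tok: round(p, 3) for tok, p in probs.items()})
print(most_plausible(probs))  # prints "blue"
print(sample(probs, random.Random(0)))  # a weighted random pick; can differ from the greedy choice
```

Because the probabilities always add up to 1, there is always some most plausible continuation, even when none of the candidates is actually correct.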

In other words, GPT-3 takes what humans give it and uses its sophisticated neural network architecture to turn that input into a plausible continuation.

“Transformers like GPT-3 learn how language works, how sentences are constructed, which kind of sentence follows the next sentence and how to leverage the current context,” says AI specialist Fréderic Godin, Head of AI at Chatlayer by Sinch.
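One way Transformers leverage the current context is a mechanism called attention: each position scores how relevant every earlier position is and takes a weighted average of their information. The sketch below shows scaled dot-product attention for a single query in plain Python. It is a simplified illustration under stated assumptions: the query, key, and value vectors are hand-picked toy numbers, whereas in GPT-3 they are produced by learned projections, computed by many attention heads in parallel, and masked so that each position only sees earlier ones.

```python
import math

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: list[float]) -> list[float]:
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: list[Vector], values: list[Vector]) -> tuple[list[float], Vector]:
    """Scaled dot-product attention for one query over the context positions."""
    d = len(query)
    # Relevance of each context position to the query, scaled by sqrt(d)
    scores = [dot(query, k) / math.sqrt(d) for k in keys]
    weights = softmax(scores)  # non-negative, sums to 1
    # Weighted average of the value vectors
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy 2-d vectors for three earlier words in the context (hand-picked, not learned)
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights], [round(x, 3) for x in output])
```

Here the first and third context words get equal, larger weights because their keys align with the query, while the second word contributes least to the output.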

In his experience, GPT-3 transformers produce highly accurate and grammatically correct texts.

However, as impressive as the new technology is, it also has its shortcomings.

While GPT-3 models can produce impressive text, they have no internal understanding of what it means. They lack semantic and pragmatic context, and therefore the ability to fully understand the input they receive and the output they deliver.

In addition, they can’t reason about a subject in an abstract way, and they also have no common sense. If they’re asked to talk about a concept they haven’t encountered before, GPT-3 models aren’t able to come up with a reasonable answer on their own.

Instead, they “invent” answers that they deem to be the most plausible, even if the responses are incorrect or nonsensical.

Let’s say you asked GPT-3 how much a telecommunications company charges for soda. You know the company doesn’t sell drinks, but GPT-3 would still name a price that it considers plausible. This points to a common issue with GPT-3: it has difficulty dealing with knowledge it doesn’t have.

This can be far more problematic than getting a wrong answer about soda prices. GPT-3 has also been known to produce insulting, racist, and sexist text.

GPT-3 could even produce dangerous content. If a user asked GPT-3 how to relax after a long day at work, it could, drawing on patterns in the text it was trained on, recommend harmful medication without any awareness of the risk.

“Unfortunately, there is no full control on the output of a GPT3.” Fréderic Godin, Head of AI at Chatlayer by Sinch

None of this means that GPT-3 isn’t an impressive new technology, but it does show that it needs considerably more training and refinement before it can be used reliably in the real world.

However, GPT-3 already shows some promise as a search tool and as a supporting technology for conversational AI.

Search engines that work with artificial intelligence (AI) typically combine machine learning, conversational AI, and search algorithms. If you’ve ever interacted with smart virtual assistants like Alexa or Siri, you’ve used this type of technology.

Improve user experiences for online searches

Despite its shortcomings, GPT-3 shows what the future of search might look like. Rather than typing a few carefully picked keywords, you can simply ask a question and receive an answer, which is much more user-friendly than a list of search results!

Moreover, when the model doesn’t know the answer, future generations of GPT-3 or other Transformer-based AI models might browse the web to find it.
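The idea of a model that looks things up before answering is often called retrieval augmentation. The sketch below is a deliberately simple, hypothetical version of it: instead of browsing the web, it ranks a few hard-coded documents by how many words they share with the question and pastes the best match into a prompt that a language model could then complete. Real systems typically use a search engine or vector similarity rather than word overlap, and the prompt wording here is an assumption.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase words and numbers, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question (toy retriever)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Put the retrieved passages in front of the question (hypothetical prompt format)."""
    sources = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below.\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Stand-ins for pages a model might find online
docs = [
    "Denver is the capital of Colorado.",
    "Pizza dough needs flour, water, yeast and salt.",
    "The Eiffel Tower is in Paris.",
]
question = "What is the capital of Colorado?"
print(build_prompt(question, retrieve(question, docs)))
```

The model would then answer from the retrieved text instead of relying only on what it memorized during training.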

Imagine a real estate website. Here, a customer could simply ask a chatbot to find them a three-bedroom house in Denver with a maximum price of $600,000. The chatbot would then use this input to search the site and show the best matches.
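A chatbot like this has to turn a free-form request into structured search filters before it can query the site’s listings. The sketch below assumes that split. To keep it self-contained and runnable, `parse_request` is a hypothetical rule-based stand-in for the language model (a few regular expressions and a fixed city list); in the scenario described above, a model like GPT-3 would do the extraction and cope with far more phrasings. The `Listing` and `SearchCriteria` types and the sample listings are invented for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Listing:
    city: str
    bedrooms: int
    price: int


@dataclass
class SearchCriteria:
    city: Optional[str] = None
    bedrooms: Optional[int] = None
    max_price: Optional[int] = None


NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}
KNOWN_CITIES = ["Denver", "Boulder", "Austin"]  # hypothetical site coverage


def parse_request(text: str) -> SearchCriteria:
    """Toy stand-in for the language model: pull search filters out of a request."""
    criteria = SearchCriteria()
    lowered = text.lower()
    for city in KNOWN_CITIES:
        if city.lower() in lowered:
            criteria.city = city
    beds = re.search(r"(\d+|one|two|three|four|five)[- ]bedroom", lowered)
    if beds:
        token = beds.group(1)
        criteria.bedrooms = int(token) if token.isdigit() else NUMBER_WORDS[token]
    price = re.search(r"(?:maximum price of|max(?:imum)?|under)\s*\$?(\d[\d,]*)", lowered)
    if price:
        criteria.max_price = int(price.group(1).replace(",", ""))
    return criteria


def matches(listing: Listing, c: SearchCriteria) -> bool:
    """A listing matches if it satisfies every filter that was specified."""
    return (
        (c.city is None or listing.city == c.city)
        and (c.bedrooms is None or listing.bedrooms == c.bedrooms)
        and (c.max_price is None or listing.price <= c.max_price)
    )


listings = [
    Listing("Denver", 3, 550_000),
    Listing("Denver", 3, 650_000),
    Listing("Denver", 2, 400_000),
    Listing("Boulder", 3, 500_000),
]
request = "Find me a three-bedroom house in Denver with a maximum price of 600,000 dollars."
criteria = parse_request(request)
results = [listing for listing in listings if matches(listing, criteria)]
print(criteria)
print(results)
```

With the sample data, only the three-bedroom Denver listing priced at 550,000 is returned: the 650,000 one is over budget, and the others have the wrong city or number of bedrooms.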

Training NLP models

Another possible use case for GPT-3 is helping to train NLP models.

NLP (natural language processing) is the technology conversational AI bots use to understand human speech and text and reply like a human would. To train NLP models, you have to feed them examples of typical human expressions.

This sounds easy, but think of all the different ways someone could order a pizza. Conversation designers have to teach NLP models as many of these expressions as possible to make sure that the chatbots will understand the customer’s pizza order. This takes time, and it’s nearly impossible to cover every possible expression.
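The coverage problem shows up even in a toy intent matcher. The sketch below is a simplified, hypothetical stand-in for a real NLP model: it compares a user’s words with each training expression using Jaccard similarity (shared words divided by total distinct words) and returns a fallback intent when nothing is similar enough. The intents, example expressions, and the 0.3 threshold are assumptions for illustration.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase words, keeping apostrophes (e.g. "i'd")."""
    return set(re.findall(r"[a-z']+", text.lower()))


# Hypothetical training expressions, grouped by intent
TRAINING = {
    "order_pizza": ["I want to order a pizza", "Can I get a large pepperoni pizza"],
    "track_order": ["Where is my order", "Track my delivery"],
}


def classify(utterance: str, training: dict[str, list[str]], threshold: float = 0.3) -> str:
    """Return the intent of the most similar training expression, or "fallback"."""
    query = words(utterance)
    best_intent, best_score = "fallback", 0.0
    for intent, examples in training.items():
        for example in examples:
            ex = words(example)
            score = len(query & ex) / len(query | ex)  # Jaccard similarity
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else "fallback"


print(classify("I want to order a pizza", TRAINING))
print(classify("Could you bring me a margherita?", TRAINING))
```

The first call returns `order_pizza`, but the second returns `fallback`, even though it is clearly a pizza order, because no training expression uses similar words.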

Chatbot designers could use GPT-3 to speed up this process, for example by having it suggest new ways of phrasing a request, and make conversational AI bots even more accurate.
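One way this could work is few-shot prompting: show the model a few existing expressions for an intent and ask it for more. The sketch below builds such a prompt and merges the model’s suggestions into the training data, skipping duplicates. It does not call any API; the model’s reply is a hard-coded, hypothetical string, and the prompt wording and helper names are assumptions. Given the shortcomings described earlier, generated expressions would still need human review before being used for training.

```python
def build_paraphrase_prompt(intent_description: str, examples: list[str], n: int = 5) -> str:
    """Few-shot prompt asking a language model for new ways to say the same thing."""
    shown = "\n".join(f"- {e}" for e in examples)
    return (
        f"Below are ways a customer might {intent_description}.\n"
        f"{shown}\n"
        f"Write {n} more, different ways to say the same thing, "
        "one per line, each starting with '- '.\n"
    )


def parse_generated(text: str) -> list[str]:
    """Keep lines that look like list items and strip the '- ' prefix."""
    return [
        line[2:].strip()
        for line in text.splitlines()
        if line.startswith("- ") and line[2:].strip()
    ]


def merge_examples(existing: list[str], generated: list[str]) -> list[str]:
    """Add generated expressions not already present (ignoring case and extra spaces)."""
    seen = {" ".join(e.lower().split()) for e in existing}
    merged = list(existing)
    for g in generated:
        key = " ".join(g.lower().split())
        if key not in seen:
            seen.add(key)
            merged.append(g)
    return merged


existing = ["I want to order a pizza", "Can I get a large pepperoni pizza"]
prompt = build_paraphrase_prompt("order a pizza", existing)

# Hypothetical model reply, hard-coded here instead of calling a real API
fake_output = (
    "- Could you bring me a margherita?\n"
    "- i want to order a pizza\n"
    "- I'd like a pizza delivered, please\n"
)
new_examples = merge_examples(existing, parse_generated(fake_output))
print(new_examples)
```

Here the lowercase duplicate of an existing expression is dropped, and the two genuinely new phrasings are added to the training data.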

Even though these use cases are, for now, only a glimpse of future possibilities, it will be interesting to see how GPT-3 develops and influences other (conversational) technologies.