Insights, Products

Lessons learned from building over 50 chatbots on 5 continents

By now it’s clear: automated conversations can add value. As a customer, it’s much easier and more satisfying to communicate with a company the way you do with your friends and family. No one likes to listen to elevator music indefinitely while on hold or wait three days for a response to an e-mail.

If AI can recognize your question and resolve it or transfer it to the right person, the added value for both the customer and the business is huge. That’s why “going conversational” is the next step forward in the way companies engage and communicate with their customers to create positive experiences.

However, for a technology that has so much potential, I always wonder why there are so many crappy voice bots and chatbots. If you own a computer or a smartphone, then it’s safe to say that you’ve dealt with a chatbot that made you so frustrated that you wanted to throw your device out the window.

Bots are supposed to make people’s lives easier, not add problems, so why are a majority of people’s interactions with bots so frustrating? The technology is there; it’s the implementation that needs updating.

Over the last two and a half years, I’ve built more than 50 chatbots for companies across all industries in Europe, Africa, Asia and the Americas. Along the way, I’ve seen teams repeat the same common mistakes when tackling a new chatbot project.

Here are four vital lessons that I learned from that experience. I promise you, if you stick to these lessons, you’ll not only build a chatbot that doesn’t suck, but you’ll build a bot that solves problems for your users and makes them feel heard and understood.

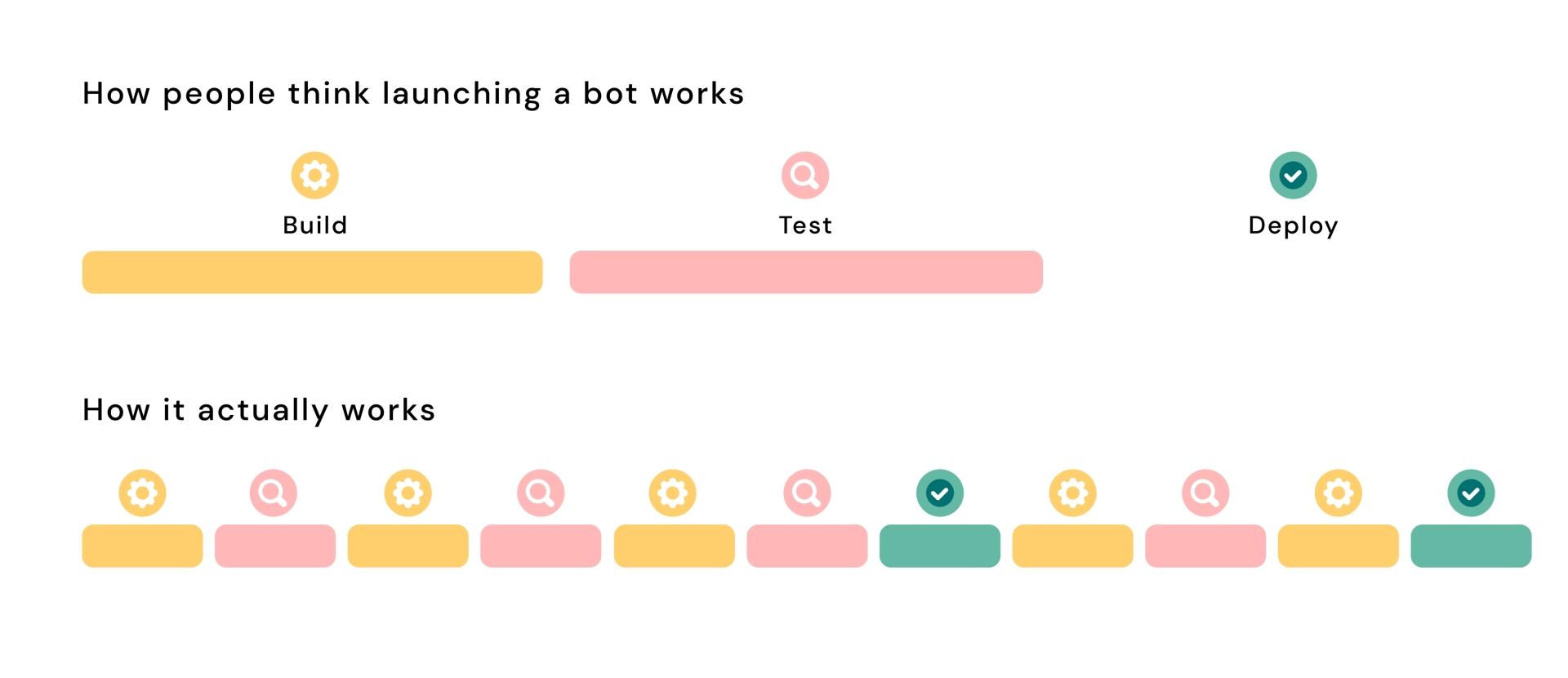

A chatbot project is really an AI project, but frequently it’s run like a traditional IT project. The best way to explain the difference is to compare building a chatbot to building a website.

A website has a predefined scope. The team agrees on its intended uses, designs journeys to match their objectives, and decides which buttons appear where and what happens when users click them. Users’ actions are finite.

Once version 1.0 of the website is out, the team tests the site. This includes making sure that everything works cross-device, or that the buttons lead to where they should. They then fix any bugs and go live.

Building a chatbot is an entirely different journey. When you build a chatbot, you start with a scope. The team agrees on an information architecture, defines the bot’s knowledge and responses, trains the natural language processing (NLP) model, and so on. However, you can’t define users’ journeys, because by giving them a keyboard, users’ actions become infinite.

A keyboard opens up the possibility for users to say literally anything and everything. This is the beauty and the potential of AI – instead of trying to understand how a website works and fall into a predefined journey, users define their own journey and experience with the chatbot.

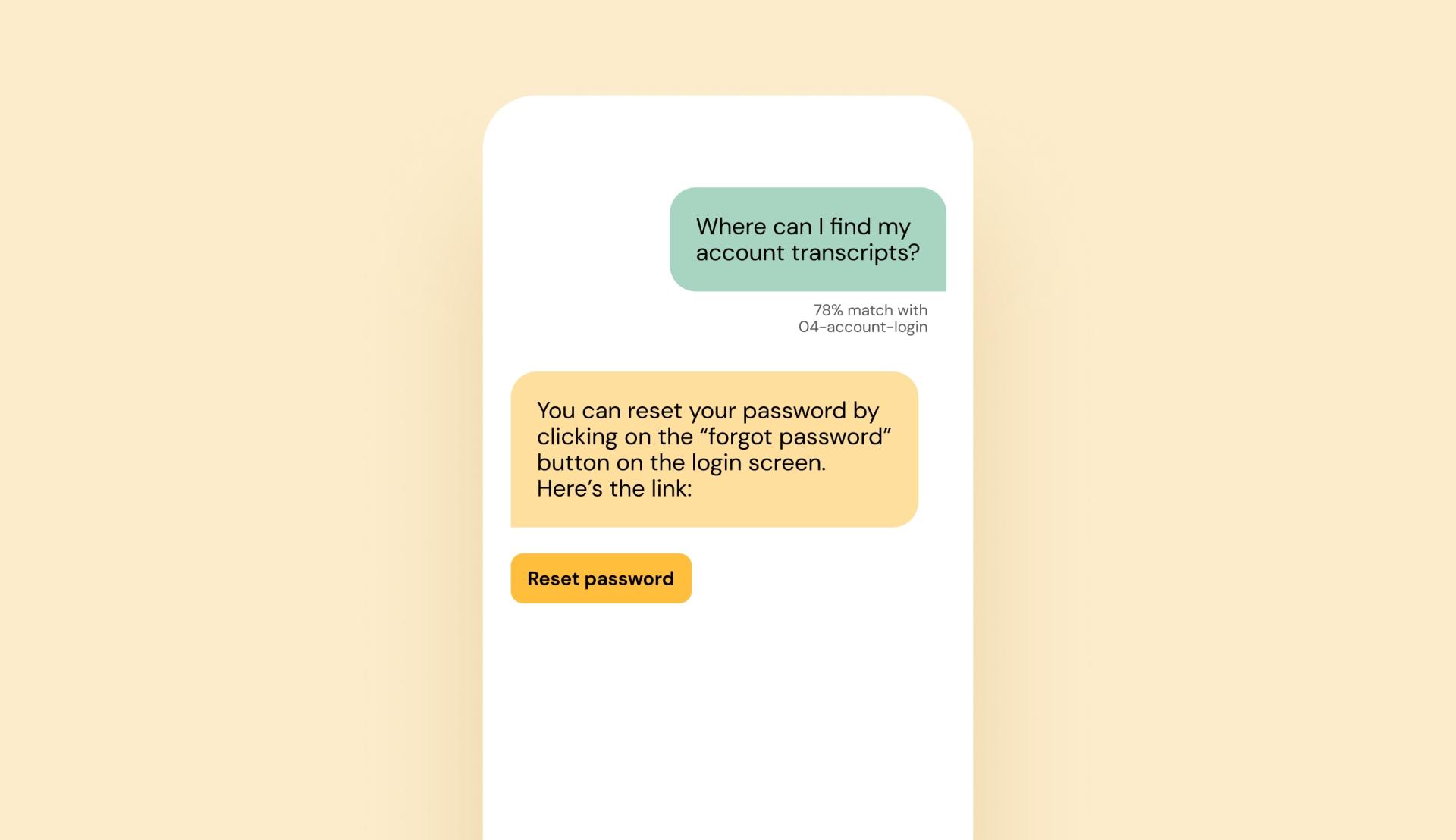

This means that even though we decide on an initial scope, people can still say whatever they want outside of this scope. And that can create issues that I refer to as “false positives” and “true negatives”.

False positives

Let’s say someone asks something that’s out of your scope. You can build your happy flows as much as you like, but an out-of-scope question can still match an intent that you have in scope, and the bot then confidently answers a question the user never asked. Plus, the more robust your in-scope NLP model is, the more broadly each intent matches, and the higher the chances that your user gets a false positive.

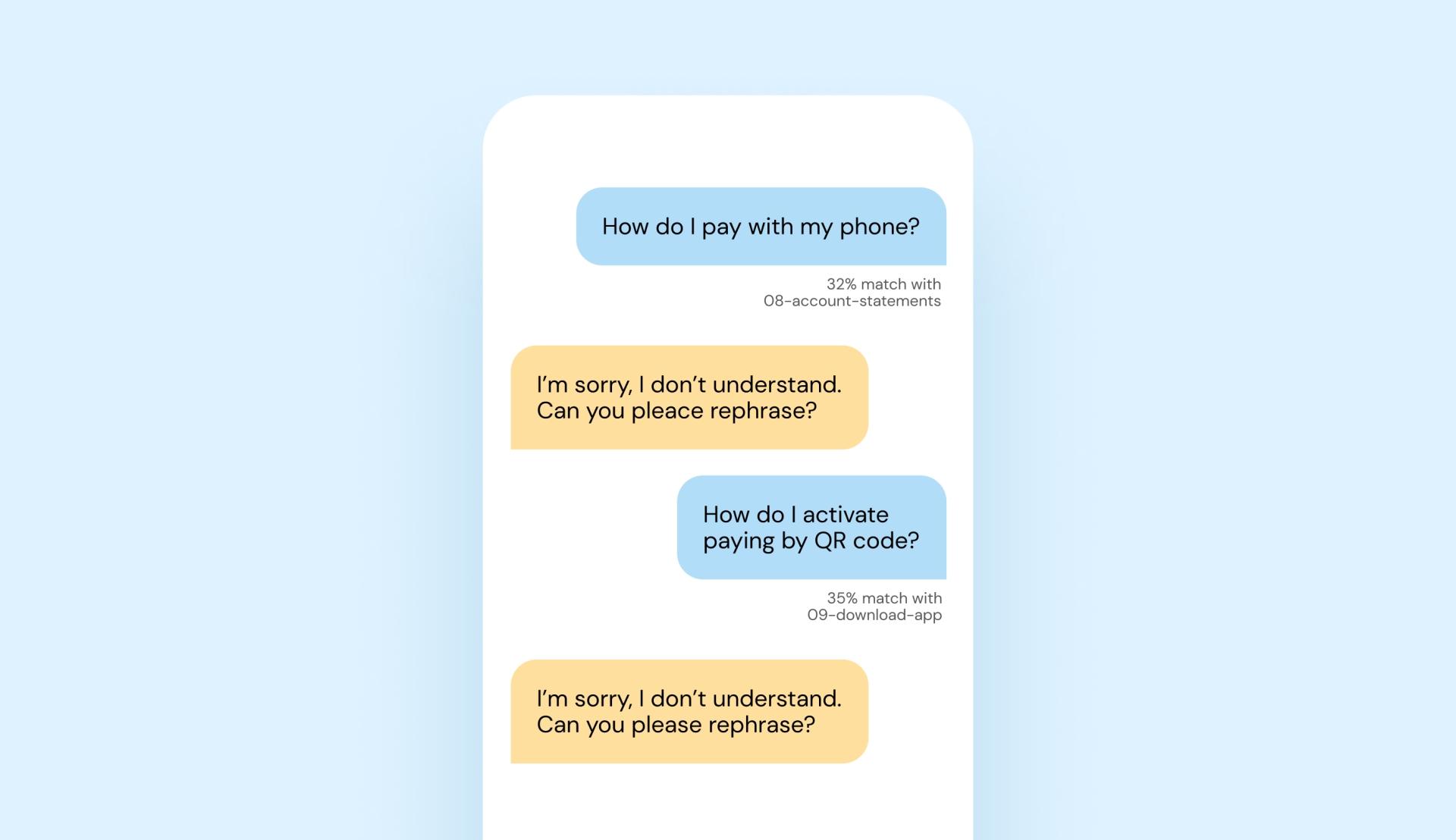

True negatives

When someone says something that’s out of scope and the bot doesn’t understand it, it’s called a true negative. In these cases, the bot typically responds with some sort of “not understood” sentence and asks the user to rephrase their question.

However, phrasing isn’t the issue, and rephrasing the question won’t solve the problem: the question is out of scope, so the bot genuinely can’t answer it no matter how it’s worded. The user and the bot then get stuck in a not-understood loop.

How can you avoid false positives and plan for true negatives when building a chatbot? First, create what I refer to as “99-intents”. These are intents that capture topics and categories that aren’t in scope. Their main purpose is to attract out-of-scope questions and define the correct next steps for users.

Second, make sure you correctly handle “not understood” scenarios. Avoid asking users to rephrase. Instead, focus on how you can get them to a solution as quickly as possible. Don’t worry, I’ll dive into that in lesson four.
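To make those two steps a bit more concrete, here’s a minimal Python sketch of how a bot could route an NLP result. The `99_` naming prefix, the `NluResult` shape and the 0.7 confidence threshold are assumptions for illustration, not the API of any particular NLP platform.

```python
from dataclasses import dataclass


@dataclass
class NluResult:
    """Hypothetical shape of an NLP prediction: top intent plus confidence."""
    intent: str
    confidence: float


def route(result: NluResult, threshold: float = 0.7) -> str:
    """Decide what the bot does next (the threshold is an assumed value)."""
    if result.confidence < threshold:
        # Not understood: don't ask to rephrase, move to a safety net instead.
        return "safety_net"
    if result.intent.startswith("99_"):
        # Out-of-scope topic caught by a 99-intent: point the user elsewhere.
        return "redirect"
    # In-scope intent recognized with enough confidence.
    return "answer"
```

With this setup, an out-of-scope question about, say, mortgages would land on a hypothetical `99_mortgages` intent and get a useful redirect, instead of falsely matching an in-scope intent.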

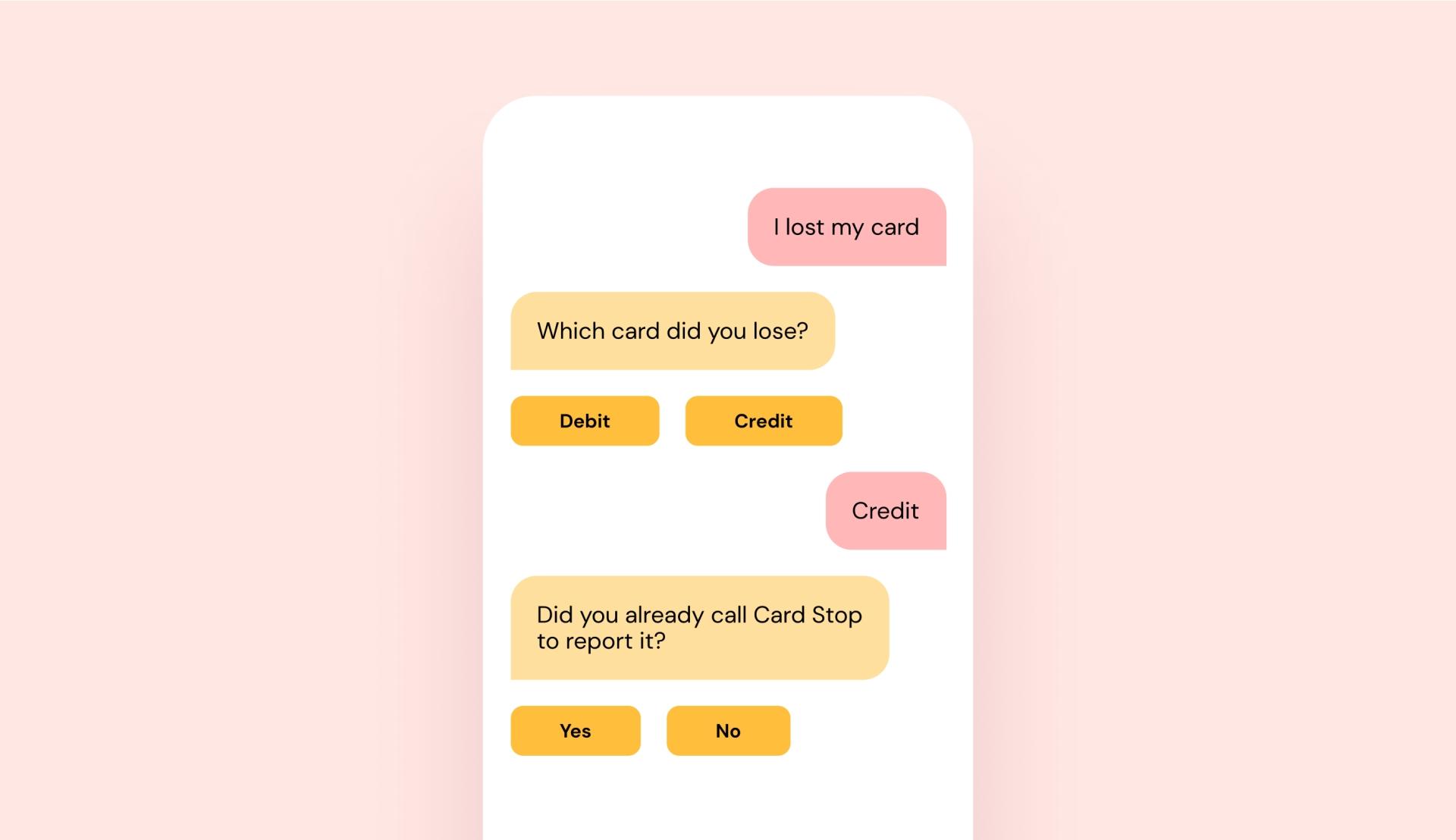

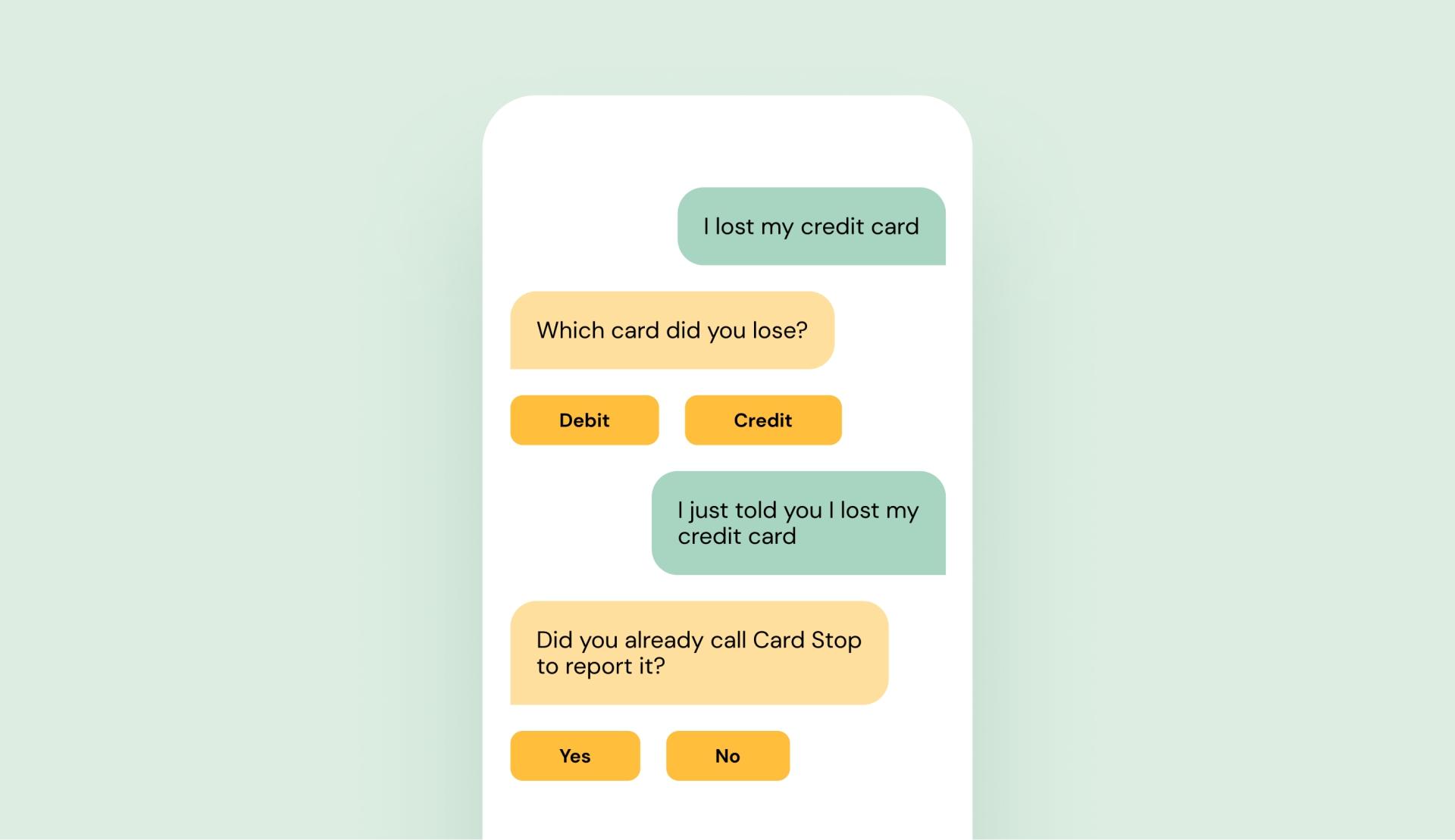

Let’s use a banking bot example for this lesson. A customer types that they lost their card and the bot asks, “which card: debit or credit?” The bot needs to know which card the user lost because the process is different depending on the card type. Great! Nothing bad has happened (yet).

Now, let’s look at a situation where another user says that they lost their credit card, and again the bot asks which card they lost. The bot correctly recognized the intent, but is asking for additional information that the user has already given. This makes the user feel like the bot didn’t properly understand them and now must repeat themselves (and no one likes to repeat themselves).

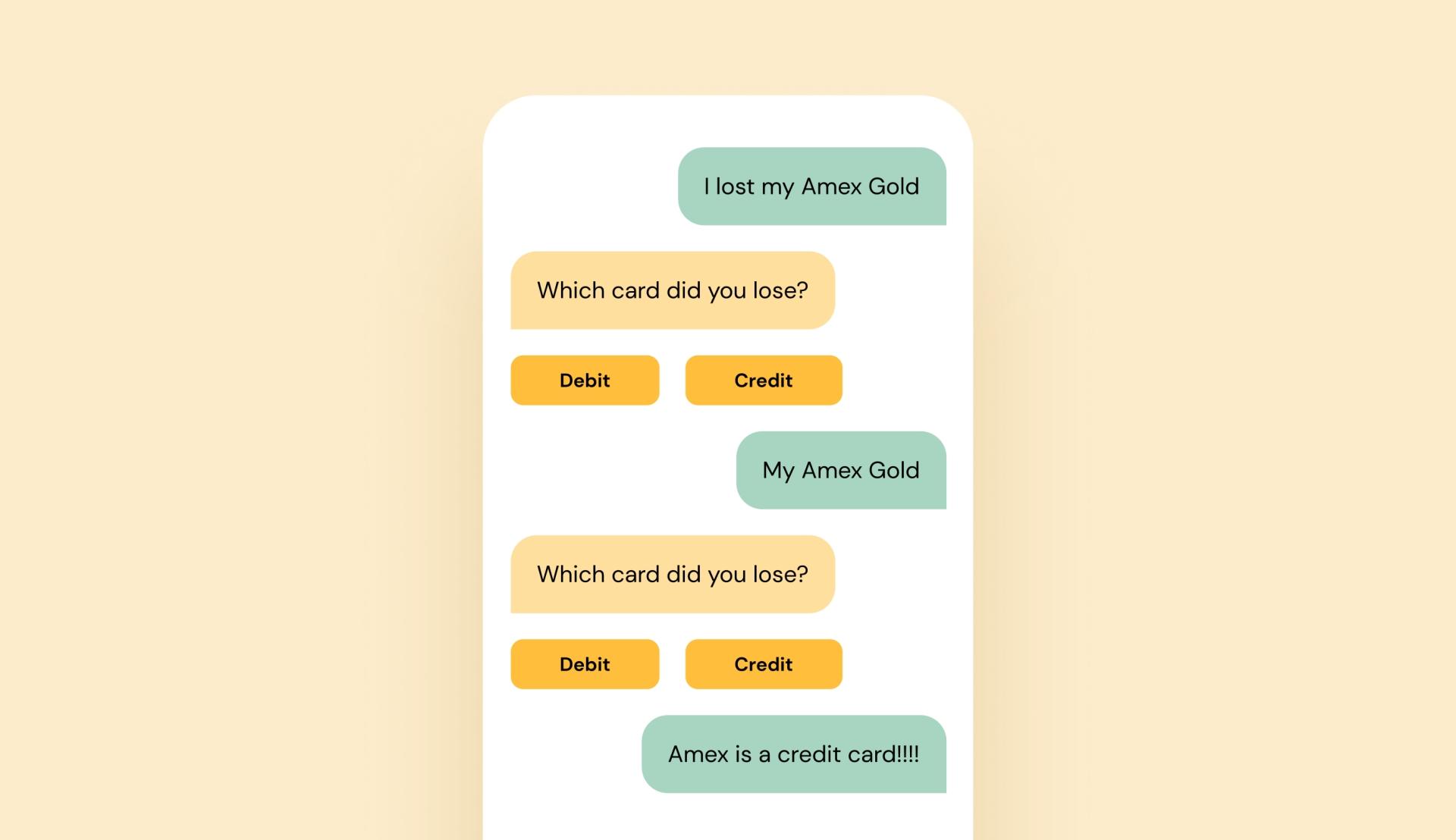

In a different case, yet another user types that they lost their Amex Gold. The bot follows up by asking which card, and the user repeats Amex Gold. The user is now stuck in a frustrating loop because this bot only expects “debit or credit” as an answer and doesn’t realize that Amex Gold is a credit card.

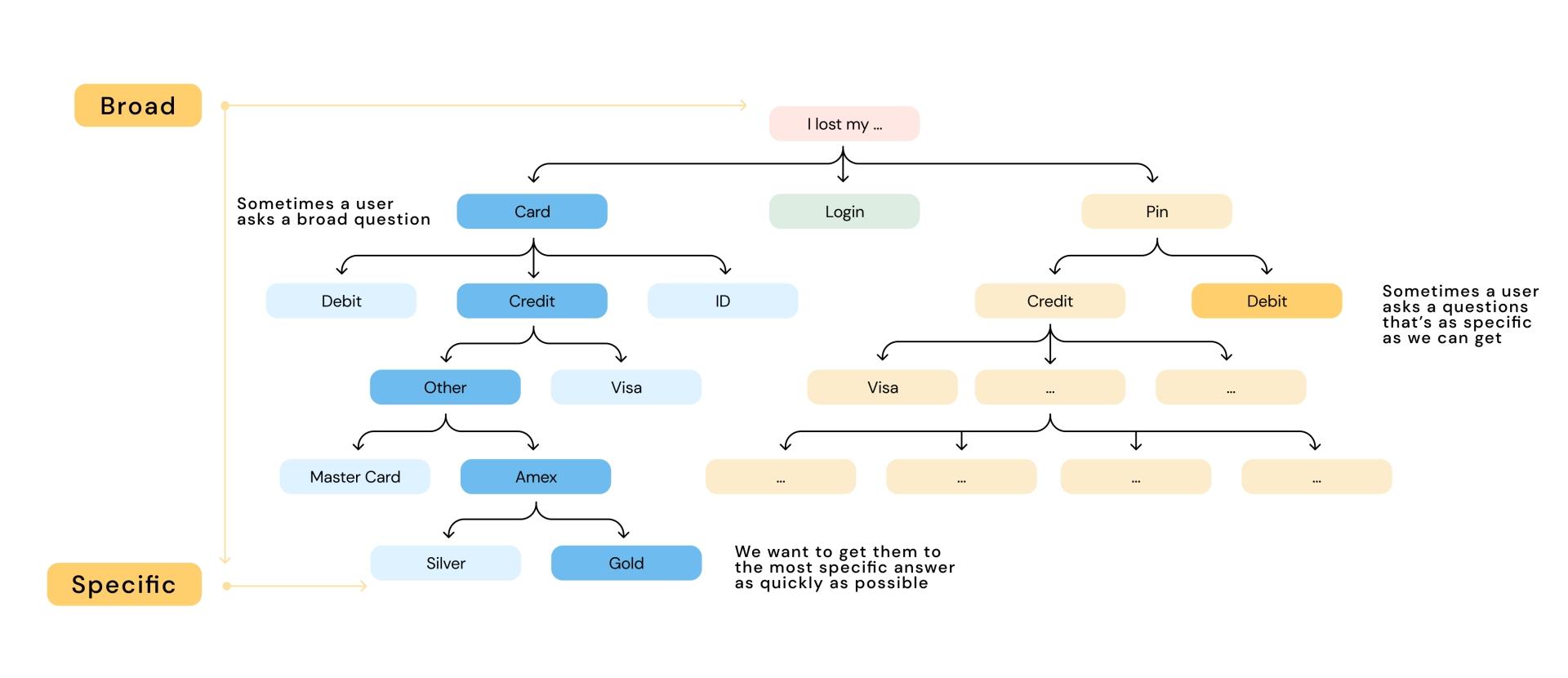

To make sure this doesn’t happen, you need to organize your content into a knowledge hierarchy. It goes from very broad to very specific.

When a user asks a question, we want the bot to give the most specific answer possible. For example, if someone says that they lost their Amex Gold, we don’t want the bot to ask them if they lost their debit or their credit card.

It’s this ability to immediately zero in on the specific that makes AI so useful. Not only can users talk the way they normally do, they feel heard and can immediately receive answers to their specific problems. On a website, by contrast, they might have to click up to six times to reach the Amex Gold information.

How can you provide access to the deepest level of information within a given intent? First, group all the similar intents and expressions together. Next, organize them from broad to specific. Finally, use separate intents and specific entities for recognition, so the bot can work out how specific its answer should be.
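As a rough sketch of that broad-to-specific idea, the Python below resolves the most specific card it can find in a message. Plain substring matching stands in for real entity recognition, and the hierarchy contents (apart from Amex Gold) and function names are assumptions chosen for illustration.

```python
from typing import Optional, Tuple

# Broad level (card type) -> specific level (product).
# Products other than "amex gold" are illustrative placeholders.
CARD_HIERARCHY = {
    "credit": ["amex gold", "visa classic"],
    "debit": ["maestro"],
}


def resolve_card(message: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (card_type, product), as specific as the message allows."""
    text = message.lower()
    # Most specific level first: a known product implies its card type.
    for card_type, products in CARD_HIERARCHY.items():
        for product in products:
            if product in text:
                return card_type, product
    # Otherwise fall back to the broader level.
    for card_type in CARD_HIERARCHY:
        if card_type in text:
            return card_type, None
    return None, None


def needs_card_type_question(message: str) -> bool:
    """Only ask "debit or credit?" when the user hasn't already told us."""
    card_type, _ = resolve_card(message)
    return card_type is None
```

Here “I lost my Amex Gold” resolves straight to a credit card, so the bot never has to ask the “debit or credit?” question.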

Keeping with banking, here’s an example that most banks in Belgium struggle with. We’re making an intent for users requesting a new version of this little device.

What would you call this? Most people would say “card reader”. Some say “bank device”, “pin machine”, “digipass”, etc. In Belgium, people also say “bakje” or “kaske”, which means small tray or container. Some people don’t even use a specific word for this; they just say something like “the thing I use to pay online”. When you start interacting with your customers, you soon realize that everyone expresses themselves differently.

For us humans, it’s pretty easy to understand the term “the thing I use to pay online” because we have context and can quickly figure out what is meant. However, a bot only knows what you teach it, so you need to make sure that all these variations are part of your AI model when building a chatbot. This applies to individual words, but also sentences and situations. For example, when someone wants to express that their card is broken, they might say that while getting out of their car, they dropped their card in the door and closed it.

This brings up the next question: how far do you need to go with this? In the above situation, do you really want the bot to understand this example? If you add this to the AI model, you’ll have to include all kinds of words that have nothing to do with a broken card. And later on, as soon as someone mentions a car or a door, the AI will start thinking of a broken card. That might not be the result you want.

There’s always a tradeoff when adding words and expressions that are unusual and deviate from more straightforward expressions, because you don’t want to take it too far. If a human would have trouble understanding what a person really means, there’s no way we can expect a bot to understand.
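To illustrate that tradeoff, here’s a small Python sketch that maps the card reader variations mentioned above to a single concept. In a real bot, these would be training expressions or entity synonyms in your NLP model. The substring check and the function name are simplifying assumptions.

```python
# Common variations users type for the same device (from the example above).
CARD_READER_SYNONYMS = [
    "card reader",
    "bank device",
    "pin machine",
    "digipass",
    "bakje",
    "kaske",
    "the thing i use to pay online",
]


def mentions_card_reader(message: str) -> bool:
    """True if the message contains any known variation (case-insensitive)."""
    text = message.lower()
    return any(synonym in text for synonym in CARD_READER_SYNONYMS)
```

Note what’s deliberately left out: anecdotes like the car door story. Adding “car” or “door” as signals would pull unrelated messages toward the wrong intent.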

You should aim to train your NLP model to recognize 90% of the incoming user questions that are in scope. Depending on the bot and scope, you can get up to a 95% recognition rate, but I typically don’t train beyond that, since the remaining 5% are usually edge cases and exceptions that are better handled by human agents.

So, how can you anticipate all the creative ways users will describe an item or situation? It’s all about testing and optimizing. When you first launch your bot, your NLP model will recognize roughly 70% of incoming, in-scope questions. You’ll need to review the incoming questions, update the NLP model with new expressions, set it live and repeat until you reach 90% recognition. Remember, a chatbot is an AI project that uses confidence scores, which means nothing is ever black and white.
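One way to track that review loop is to measure the recognition rate on logged conversations. The Python below is a sketch only. It assumes each log entry is a dict with the message `text`, an `in_scope` flag set by a human reviewer and the model’s `confidence`, and that “recognized” means the confidence reached an assumed threshold of 0.7.

```python
def recognition_rate(logs, threshold=0.7):
    """Share of in-scope questions the model recognized (0.0 if none logged)."""
    in_scope = [entry for entry in logs if entry["in_scope"]]
    if not in_scope:
        return 0.0
    recognized = [e for e in in_scope if e["confidence"] >= threshold]
    return len(recognized) / len(in_scope)


def to_review(logs, threshold=0.7):
    """In-scope messages the model missed: candidates for new expressions."""
    return [
        entry["text"]
        for entry in logs
        if entry["in_scope"] and entry["confidence"] < threshold
    ]
```

After each review round, you’d add the missed expressions to the model, set it live and run the same measurement again until the rate reaches your target.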

When a bot doesn’t understand someone, the reply can sometimes be, “Sorry, I don’t know the answer”.

The problem with that approach is that it doesn’t help the user, and it creates a negative experience. Imagine your Wi-Fi goes out and you contact your provider via their chatbot. If the bot doesn’t understand what you said and responds with “Sorry, I don’t know”, you now have two problems: your Wi-Fi doesn’t work and the bot doesn’t understand you.

This pairs with what I mentioned earlier about in-scope and out-of-scope expressions. If someone says something that’s out of scope, the bot needs to realize that and provide an alternative solution.

Instead of saying, “Sorry, I don’t know”, the bot can direct the user to an FAQ page, provide a link to a video that walks the user through resetting the router, give the user the customer support phone number, or offer to connect the user to a live agent. There are many ways the bot can help the user get closer to a solution, even if it can’t solve the problem on its own. By helping the user find a solution, the bot adds value rather than adding frustration.

At Campfire AI, we always develop an elaborate flow of safety nets. That way, we make sure we’ve exhausted every possible way to help the user before wrapping up.
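As a simplified illustration of the safety net idea (not a description of any specific production flow), the Python below steps through the alternatives mentioned above each time the bot fails to understand. The step names and their order are assumptions.

```python
# Fallback options from the Wi-Fi example, in an assumed escalation order.
SAFETY_NETS = [
    "suggest_faq_page",
    "share_help_video",
    "give_support_phone_number",
    "offer_live_agent",
]


def next_safety_net(failed_attempts: int) -> str:
    """Pick the next fallback; stay on the last one once all are used."""
    index = min(max(failed_attempts, 0), len(SAFETY_NETS) - 1)
    return SAFETY_NETS[index]
```

The point is that the bot never replies with a dead-end “Sorry, I don’t know”. Every failed attempt moves the user one step closer to a solution.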

I never said it would be easy to build a chatbot that doesn’t suck. But I promise that if you apply these four lessons, you’ll have a chatbot that improves the customer experience and helps bring your users closer to a solution.